Hi, I am Gregor Hohpe, co-author of the book Enterprise Integration Patterns. I work on and write about asynchronous messaging systems, distributed architectures, and all sorts of enterprise

computing and architecture topics.

Hi, I am Gregor Hohpe, co-author of the book Enterprise Integration Patterns. I work on and write about asynchronous messaging systems, distributed architectures, and all sorts of enterprise

computing and architecture topics.

Find my posts on IT strategy, enterprise architecture, and digital transformation at ArchitectElevator.com.

Queues are key elements of any asynchronous system because they can invert control flow. Because they can re-shape traffic, they enable us to build high-throughput systems that behave gracefully under heavy load. But all that magic doesn't come for free: to function well, queues require flow control. Time for another look at the venerable message queue.

After developing a notation and vocabulary to express control flow, we can describe queues from a new dimension: time. We mentioned arrival and departure rates in the previous post: rates represent the first derivative of a variable over time. Hence, a message arrival rate describes how many messages arrive in a specified interval, e.g., per second. That arrival rate, in turn, varies over time and helps describe the dynamic behavior of any system. For example, peaks in the arrival rate are the typical cause of system overload. Also, the rate at which the arrival rate increases (the second derivative) defines how quickly a system has to scale up to respond to sudden load spikes (to underline its relevance, AWS Lambda just improved that metric by up to 12x).

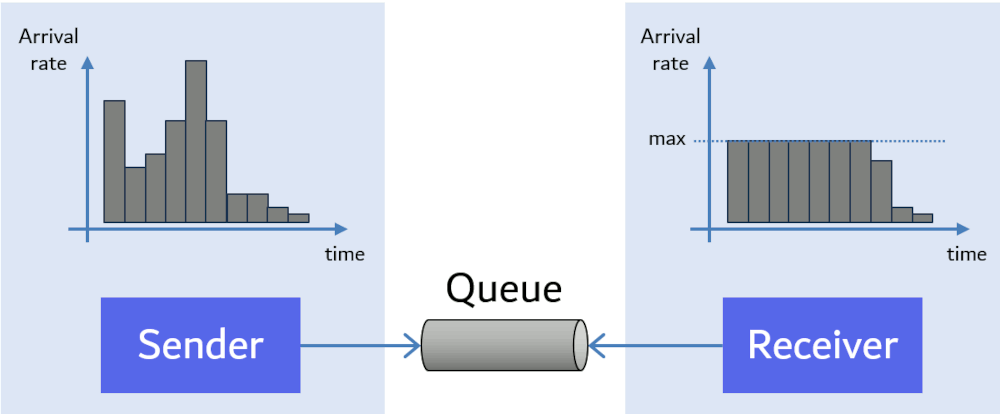

Plotting arrival rates over time provides a great way to depict the power of queues. Queues can flatten that message rate curve, also known as Traffic Shaping in networking circles:

We see an uneven arrival rate of messages on the left, which turns into a nice and steady processing rate at the right. Naturally, when the arrival drops below the processing rate, the queue shrinks and once it is empty, the processing rate tracks the arrival rate as long as the latter is lower than the former. If the arrival exceeds the processing rate, the queue springs back into action. Many real-life queues allow receivers to process messages in batches instead of one-by-one, but we'll park that aspect for later.

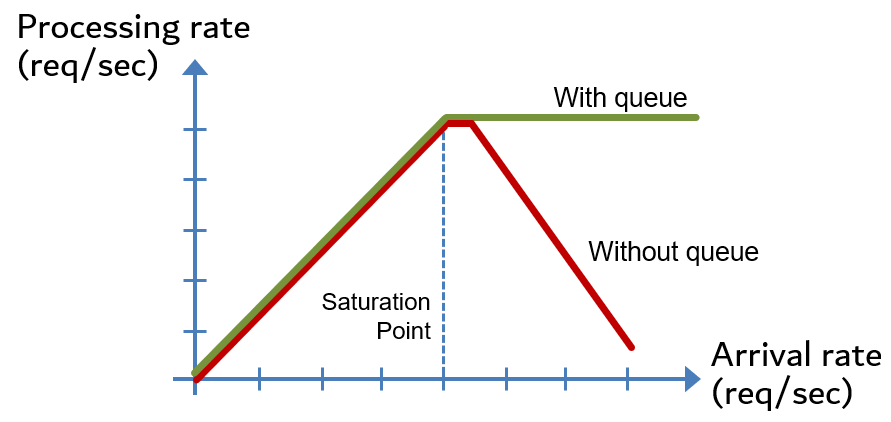

The great advantage of traffic shaping is that it's much easier to build and tune a system that handles the traffic pattern on the right than one that handles the traffic on the left. Specifically, systems that queue requests and process them using a worker pool behave more gracefully under heavy load: response times will increase, but the system is less likely to collapse under its own weight thanks to constant processing rates. In contrast, synchronous systems can get so busy accepting new requests that they no longer have resources available to process existing requests. The sad result is that as load increases beyond a certain threshold, system throughput actually decreases, as shown in the diagram. It's easy to see that this isn't a good thing.

That's why queues are widely revered as a "buffer" to protect a system from the noisy and spiky world of flash sales, marketing campaigns, or denial of service attacks. Jamming messages into a queue at a high arrival rate is unlikely to overload the system. Meanwhile, the workers are chugging along at the optimal processing rate.

Now, as architects we know that there are no miracles in system architecture, and the same is true for queues. As any element, a queue has finite capacity. Usually that capacity is high in comparison to processing capacity (like worker nodes) because storing messages is cheaper than running threads. Nevertheless, it is finite. Interestingly, both Amazon SQS and GCP Pub/Sub state that there is no limit on the number of messages. "Unlimited" is a word that providers rarely use, but if we read carefully, "unlimited" just means that no explicit limit is set, so it doesn't equate to "infinite". We get a hint of that nuance when reading up on Lambda function limits:

Each instance of your execution environment can serve an unlimited number of requests. In other words, the total invocation limit is based only on concurrency available to your function.

Similarly, GCP's Pub/Sub doesn't limit the number of messages that are retained, but it does limit the duration:

By default, a Subscription unacknowledged messages in persistent storage for 7 days from the time of publication. There is no limit on the number of retained messages.

Even in the absence of a hard limit, letting a queue grow arbitrarily may not be a good idea. Little's Result, one of the fundamental equations of queuing theory, reminds us that the average wait time equals the number of customers in the system divided by the arrival rate. So, wait times goes up with queue length (Little's result assumes a stable system, one where the arrival rate doesn't consistently exceed the processing rate).

Long wait times will impact the user experience. Messages, such as product orders, may no longer be relevant if it takes a year until they are processed. So, your system may technically function, but it no longer addresses your users' expectations, a great case of "all lights are green, the system is down".

For a queued system to perform adequately under consistently high load, it requires some form of Flow Control.

Wikipedia describes the role of flow control as:

Managing the rate of data transmission between two nodes to prevent a fast sender from overwhelming a slow receiver.

As we saw above, a queue provides flow control by decoupling the control flow between sender and receiver. But to do so effectively, it ultimately requires additional flow control.

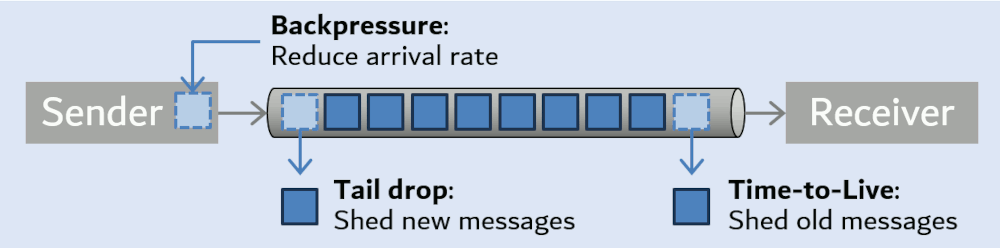

Three main mechanisms provide flow control to avoid filling a queue beyond its manageable or useful size and to keep the system stable:

Although neither option appears particularly appealing, implementing explicit flow control is much better than letting excess traffic take its course. For example, when the AWS Serverless DA team built their Serverlesspresso app, they found that their backend consisting of two baristas is easily overloaded by demand spikes for free coffee. They could queue requests, but learned that folks would not wait longer than a minute or two for their order to be completed. This led to wasted coffee plus a poor user experience as subsequent users would wait for the baristas to prepare coffees that were never picked up. So, the team resorted to back pressure to not allow new orders when the queue reached a specified size.

Dropping newly arriving messages may seem like a poor business choice—what if one of those messages includes a large order? In reality, though, insufficient flow control will lead to exactly the same effect: if an ecommerce website becomes overloaded, it will try to process orders already in the queue. Meanwhile, new customers can't place an order because the see an error message. Depending on the usefulness of the error message and the UI flow, such an error message could equate to backpressure, e.g., if the shopping basket is maintained for later order placement. Throwing a 500 error when users try to place the order is the very definition of tail dropping.

I am firm believer in learning about architecture from real life situations, so you will find all forms of flow control in many lines, e.g., those outside a popular club (we once waited over an hour to get into the then-famous Ghost bar on top of the Palms Casino in Las vegas). Customers will likely institute their own Time-to-live policy by eventually giving up. The bouncers may straight out-turn away arrivals in a tail-drop fashion. Or they may set up a secondary queue or holding place of sorts as a form of backpressure.

You'd expect the folks at the large cloud providers to know a thing or two about handling heavy load. Unsurprisingly, we can learn how AWS uses these mechanisms from an article authored by one of their senior principal engineers, even though they don't use the common names:

Popular message queue systems like RabbitMQ have built-in flow control to protect their queues:

A back pressure mechanism is applied by RabbitMQ nodes to publishing connections in order to avoid runaway memory usage growth. RabbitMQ will reduce the speed of connections which are publishing too quickly for queues to keep up.

Backpressure, TTL, and Tail Drop are the equivalent of a pressure relieve valve in that they engage once things are about to boil over. When you are designing a system that receives messages, you may know its limits upfront and avoid having to back off. That's why many cloud systems have explicit options to limit the delivery rate for push delivery. In a way, you are exerting constant back pressure to limit the rate of message arrival.

EventBridge Pipes has an explicit (fixed) rate limit for external APIs (HTTP calls), through the Invocation Rate Setting for API Destinations. It invokes Lambda functions directly through an asynchronous invocation without any explicit limit (we assume the built-in drivers have an algorithm similar to GCP). Other event sources like SNS use so-called Event Source Mapping, which uses its own poller (i.e. Driver), configured via maximum batch size (counted in messages) and maximum time (counted in seconds).

GCP Pub/Sub doesn't have an explicit setting, but relies on a Slow Start Algorithm that increases delivery speed as long as the downstream system handles the load (indicated by a 99% acknowledgment rate and less than one second push request latency). If the receiver starts to struggle, the sender eases off.

I found a quota of 5000 messages / second for Azure Event Grid push delivery, but I wasn't able to find how it backs off in case that it overloads downstream services. An article published on the Messaging on Azure Blog shows dynamic traffic ramp-up, but it's for pull delivery:

Understanding flow control allows us to build robust systems that include queues. We can summarize our key learning as:

Queues invert control flow but require flow control.

A vocabulary to describe the dynamic behavior of asynchronous messaging systems allows us to build reliable systems and makes a useful addition to the existing messaging patterns that mainly describe the data flow of messages.

You may enjoy the other posts in this series:

Share: Tweet

Follow:

Follow @ghohpe

![]() SUBSCRIBE TO FEED

SUBSCRIBE TO FEED

More On: INTEGRATION CLOUD EVENTS ALL RAMBLINGS